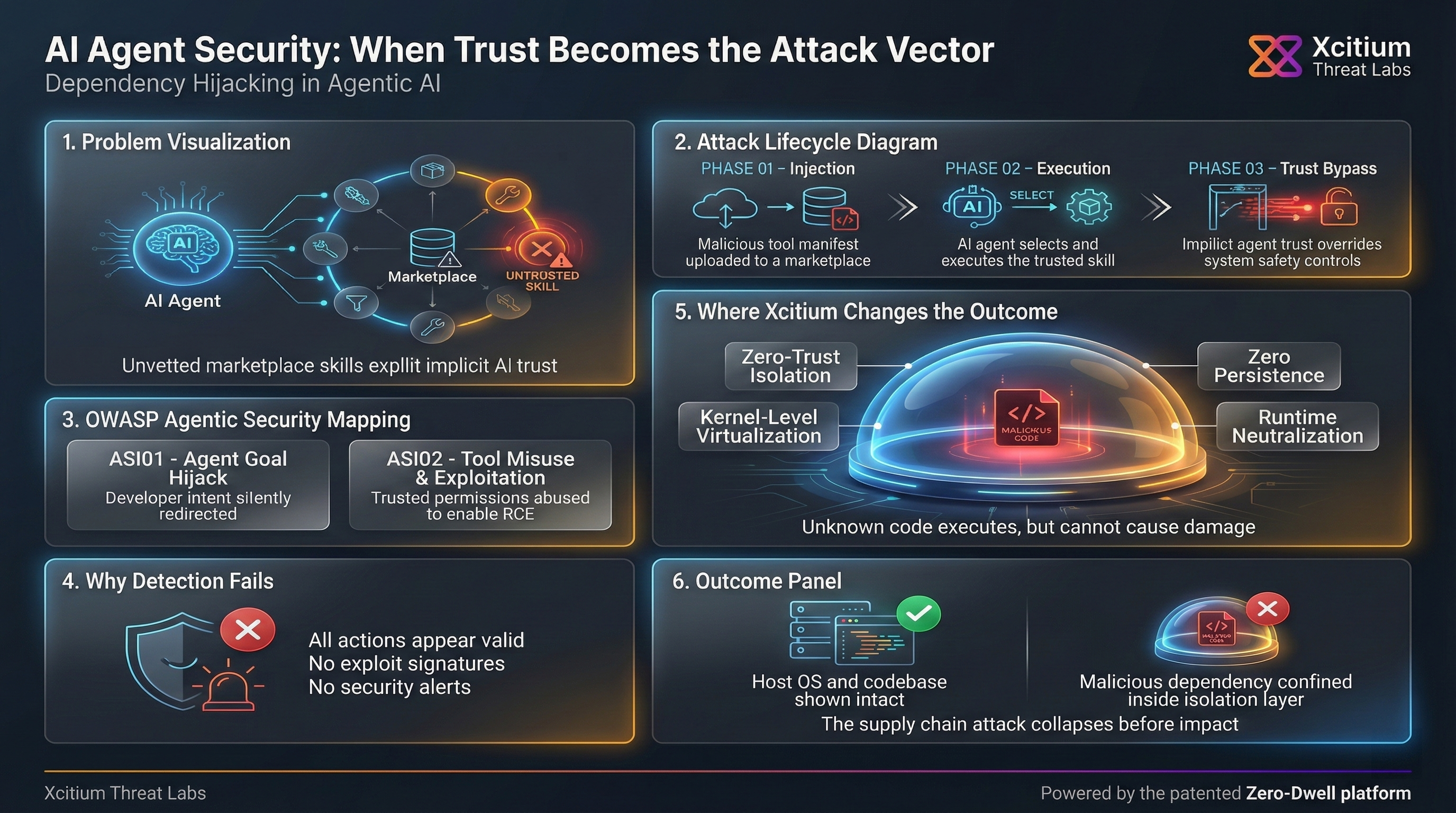

Explore dependency hijacking in AI agents, a critical supply chain risk. Learn how malicious marketplace skills exploit AI trust, impacting OWASP ASI01 and ASI02, and discover advanced zero-trust defenses.

The Rise of Agentic AI and Its Hidden Vulnerabilities

AI agents are changing the face of software development. These are sophisticated agents that do not limit themselves to any kind of auto-complete functionality, but instead, they actually plan modifications, invoke tools, install libraries, and modify code bases.

As a result, these services optimize complicated processes, thereby substantially improving programmer productivity. Nonetheless, such freedoms give rise to new threats associated with information security. The adoption of external plugins and skills in the market, although valuable, also provides avenues for attacks, requiring equal attention. Hence, awareness of these emerging threats is central in securing a dev environment.

How Marketplace Skills Can Undermine Trust

AI code assistants like Claude Code may be dependent on marketplace manifests to identify and incorporate plugins. These plugins normally advertise one or more “skills,” which are callable tools the agents can use.

For instance, a developer may ask the agent to include an HTTP client in their code. The agent, in return, may utilize a manage_python_dependencies skill for the installation of the library httpx. From the perspective of the agent, the use of this skill is as reliable as any other function of the agent itself. Interestingly, it is this very aspect which the attackers can utilize.

Attack Lifecycle Anatomy

The Anatomy of a Dependency Hijacking Attack

A dependency hijacking attack in such a context is quite subtle yet dangerous. It all happens when the developer installs the plugin created by the hacker through an untrusted marketplace while building the app. This is how the process happens:

- The Lure: The attacker releases a legitimately useful plugin with a talent for handling dependencies.

- Trigger: A developer asks the AI agent to install a valid and widely-used library.

- The Hijack: The agent, confident in its internal skill set, invokes the malicious dependency manager. Rather than retrieving the genuine package, the skill is coded to install a tampered-with version from a non-legitimate source.

It goes unnoticed, allowing no immediate flags to arise. The hacker notices the agent completing the task as instructed, unaware that the trojanized library has been implanted within their project. As a direct result of the attack, the malware lingers from session to session, hijacking future installs until the malicious plugin is identified.

A New Front in the Supply Chain War

This particular attack method signifies a new era of risks to software supply chains. It uses our trust in the tools we automate to harm us. The OWASP Top 10 for Agentic Applications gives us a comprehensive insight into this threat via two prominent risks.

OWASP Agent Security Core

Agent Goal Hijacking

Subverting the agent’s core objectives by poisoning tool manifests or skill definitions to redirect internal logic.

Tool Misuse & Exploitation

Leveraging trusted agent permissions to execute unauthorized actions or remote code via unvetted third-party skills.

ASI01: Agent Goal Hijack

Agent Goal Hijack refers to when an agent’s goal is hijacked. In this case, the goal of the developer is to install the genuine httpx library. However, the skill distorts this goal with its own goal to install a counterfeit build. The AI agent follows the skill’s internal reasoning as the optimal path to achieve its objective. The attack is not an example of prompt injection but an adversarial tool that radically changes how an agent behaves.

ASI02: Tool Misuse and Exploitation

Furthermore, it also showcases an instance of Tool Misuse. The AI model uses trusted tools such as pip and file writer tools for legitimate tasks in the model’s execution flow. However, the attacker’s skill causes these tools to behave in an unreliable manner by turning the dependency management process into an RCE mechanism. The model turns out to be an unwitting party in introducing security vulnerabilities in code by utilizing authorized model capabilities for that purpose.

Zero-Trust Isolation Architecture

Beyond Detection: The Case for Zero-Trust Isolation

Conventional approaches in securing a system, mostly aimed at identifying known threats, are unable to cope with such emerging attacks. For the reason that the actions taken by the agent are seemingly valid, signature-based approaches are likely to miss identifying such threats, meaning that a more comprehensive approach, from detection to proactive isolation, is more appropriate in defending against such threats.

Application Isolation as a Modern Defense

Application isolation, a core principle of zero-trust architecture, offers a powerful solution. Instead of trying to determine if an action is malicious, this approach assumes no implicit trust and isolates processes to prevent them from causing harm. For instance, Xcitium’s Zero Trust Application Isolation utilizes kernel-level virtualization to create a isolated environment for all unknown executables and processes.

In the context of an AI agent dependency hijack, this technology would work as follows:

- When the AI agent attempts to install the compromised dependency, the action is executed within a Zero Trust isolation environment.

- The malicious library, even if successfully installed, remains confined within the isolation layer and cannot interact with the host system or other trusted applications.

- It cannot access the host system, modify the actual project codebase, or exfiltrate data.

Modern Defense: Zero Trust Isolation

Isolation Over Detection

This approach effectively neutralizes the threat at runtime without needing to identify the malicious intent beforehand. It isolates the potential damage, allowing developers to leverage the power of AI agents while mitigating the inherent supply chain risks. As a result, security shifts from a reactive posture to a proactive one, ensuring that even sophisticated, AI-driven attacks are rendered harmless.

The Broader Landscape of AI Supply Chain Risks

The dependency hijacking attack example points to the importance of AI security and the topic of the supply chain. Like traditional software development, open-source libraries and package maintainers are threats in the creation of AI, but the use of AI as an agent exacerbates the problem. An AI agent, as an autonomous and efficient system, has the potential to be exploited as an attack tool through no intent of its own.

As a result, the dependency on outside marketplaces for skills and plugins automatically has a risk factor associated with it. Thus, the AI agent ecosystem needs to be included in the supply chain practices for security.

Real-World Echoes and Future Threats

Although Claude Code is an example of a specific vulnerability problem, it has wider relevance to known real-life attacks. For example, in 2025, “Shadow Escape” zero-click exploit vulnerabilities were found to target MCP-based agents, showing that it’s possible for an agent to be compromised without user interaction.

Similarly, another example involving the misuse of an AI-powered chatbot used in a Chevrolet dealership to sell an $76,000 vehicle for $1 draws attention to how goal hijacking may result in direct financial expenses. The above-quoted instances highlight the pressing need for adopting effective security strategies as AI agents continue to become increasingly popular, increasing the attack vector.

Securing the Future of Agentic AI

For protecting AI agents from dependence hijacking attacks, among others, an approach involving several strategies needs to be adopted.

Firstly, developers need to exercise extreme caution while incorporating third-party plugins and skills acquired through marketplaces. Thorough vetting of these components is imperative.

Secondly, securing architectures like “zero trust” also become the order of the day. Here, the concept of least privilege is applied over every request and every process, thus ensuring the integrity of the system remains unaffected despite a potentially breached component.

Lastly, the monitoring process of the activity of the AI agent is crucial for the early detection and response to anomalies. Putting emphasis on vigilance measures coupled with the use of applications such as Zero Trust Application Isolation can ensure that the pros of agentic AI applications are tapped into effectively while offsetting the cons encompassed by these applications.

Conclusion: When AI Trust Becomes the Attack Vector

The dependency hijacking scenario outlined in this analysis exposes a new class of supply chain risk created by agentic AI. When AI agents are allowed to install dependencies, invoke tools, and modify code autonomously, trust becomes executable. A single malicious marketplace skill is enough to redirect an agent’s behavior and silently introduce compromised libraries into production environments. No exploit kits are required. No alerts are triggered. The agent simply follows what it believes is a trusted instruction path.

Why This Risk Extends Beyond AI Assistants

AI agents now sit inside development pipelines and carry significant authority. When that authority is abused, the impact is immediate:

- Trusted tools become delivery mechanisms for trojanized dependencies

- Developer intent is overridden by poisoned skills and manifests

- Malicious libraries persist across sessions and future installs

- CI pipelines inherit compromise without any visible warning

- Detection-based security sees only valid actions, not malicious outcomes

Any environment that allows autonomous code execution without isolation is exposed by design.

Where Xcitium Changes the Outcome

For organizations using Xcitium Advanced EDR, dependency hijacking fails at execution.

- AI-driven installs are intercepted at runtime

- Compromised packages may install, but code can run without being able to cause damage

- Malicious libraries cannot modify real codebases or access sensitive assets

- Persistence across sessions is blocked automatically

- The supply chain attack collapses before impact

Xcitium enforces Zero-Dwell execution control, ensuring AI agents can operate at speed without turning trust into risk.

Secure AI Development Before Trust Is Weaponized

Agentic AI is accelerating software delivery, and attackers are racing to exploit it. Securing the future of AI-driven development requires stopping threats at execution, not investigating them afterward.

Protect your AI workflows and software supply chain.

Choose Xcitium Advanced EDR, powered by the patented Zero-Dwell platform.