A critical AI flaw in ServiceNow could have let attackers impersonate users and seize full control of the platform. Learn what happened and how to keep AI-enabled systems secure.

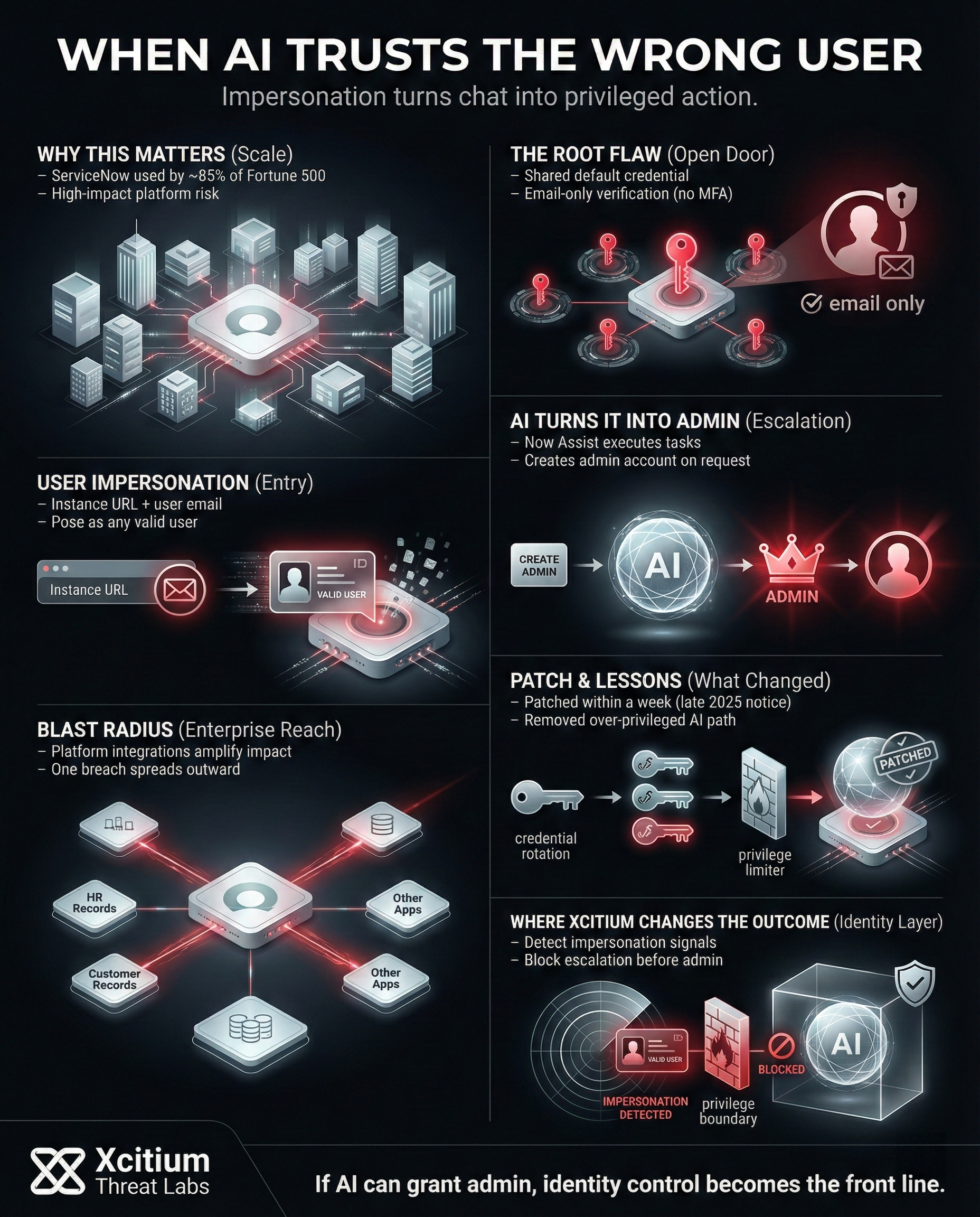

Security researchers recently disclosed an AI vulnerability in ServiceNow, a platform used by roughly 85% of Fortune 500 companies, so any flaw in it can have far-reaching impact. This was no ordinary bug: it allowed attackers to impersonate users and potentially take over entire corporate systems.

ServiceNow’s AI Chatbot Flaw Exposes Critical Systems

ServiceNow’s Virtual Agent chatbot lets users carry out tasks through a chat interface. However, researchers found security oversights in how third-party chat integrations connected to it: every integration used the same default credential.

Furthermore, the system required only a user’s email address to verify identity, with no password or MFA. Because of these gaps, an attacker who knew a ServiceNow instance URL and a valid user’s email address could pose as that user and gain access to the platform.
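To make the gap concrete, here is a minimal, hypothetical Python sketch contrasting an email-only identity check behind one shared integration secret with a hardened check that requires a per-integration secret and a session that has completed password and MFA verification. The function names, the `Session` type, the integration IDs and the secret values are illustrative assumptions, not ServiceNow’s actual code or API.

```python
import hmac
from dataclasses import dataclass
from typing import Optional, Set

# Hypothetical flawed setup: every integration ships with the same default secret.
SHARED_DEFAULT_SECRET = "default-integration-secret"

# Hypothetical hardened setup: one unique secret per integration.
# In practice these would come from a secrets manager and be rotated, not hardcoded.
INTEGRATION_SECRETS = {
    "chat-connector-a": "a-unique-secret-7f2c",
    "chat-connector-b": "b-unique-secret-91ab",
}


@dataclass
class Session:
    user_email: str
    password_verified: bool
    mfa_verified: bool


def flawed_link_user(secret: str, claimed_email: str, known_users: Set[str]) -> Optional[str]:
    """Email-only check: anyone holding the shared secret can claim to be any user."""
    if hmac.compare_digest(secret, SHARED_DEFAULT_SECRET) and claimed_email in known_users:
        return claimed_email  # impersonation succeeds with nothing but an email address
    return None


def hardened_link_user(integration_id: str, secret: str, session: Session) -> Optional[str]:
    """Require a per-integration secret AND a session that proved identity with MFA."""
    expected = INTEGRATION_SECRETS.get(integration_id)
    if expected is None or not hmac.compare_digest(secret, expected):
        return None  # unknown integration or wrong/default secret
    if not (session.password_verified and session.mfa_verified):
        return None  # an email address alone is not proof of identity
    return session.user_email
```

In the flawed version, the shared secret plus a known email address is enough to act as that user; the hardened version rejects the default secret and also refuses sessions that have not completed MFA.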

AI Chatbot Exploit

- Critical credential flaw: A default credential shared across all integrations, combined with email-only verification, created an open door for attackers.

- User impersonation: Because no password or MFA was required, attackers could pose as any valid user with just that user’s email address.

- Full platform takeover: In testing, Now Assist AI was used to create an unauthorized admin account, granting full control of the platform.

From User Impersonation to Full Platform Takeover

Once inside, an attacker could escalate much further thanks to ServiceNow’s Now Assist AI feature, which can carry out automated tasks on the platform. In one test, after gaining user-level access, the researcher instructed this AI to create a new account. The agent complied and created an administrator account, handing the attacker full control of the system.

ServiceNow ties into many other business applications. As a result, a single breach can quickly spread to other systems. For example, the attacker might access HR or customer records that ServiceNow manages, or misuse its connections to other tools. The AI meant to streamline work became a tool for attackers to extend their reach across an organization.

Swift Response and Lessons for AI-Powered Platforms

ServiceNow was notified of the problem toward the end of 2025 and deployed patches a week later. The company also changed the shared credential and removed the over-privileged AI agent that had made it possible to create admin accounts. ServiceNow said it saw no signs that attackers had exploited the vulnerability, although it could have been abused quietly before the patch.

The incident is a warning for every organization adding AI capabilities: authentication and access control cannot be an afterthought. Security experts say AI features should be held to the same standards as any other critical code. Teams need to define exactly what each capability is allowed to do and enforce those limits. For example, no virtual assistant should be able to create admin accounts or change critical settings.
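The principle of limiting what an AI capability may do can be sketched as a deny-by-default allowlist that sits between the agent and the actions it can trigger. This is a minimal Python illustration under stated assumptions: the action names, `AgentPolicy`, `ActionDenied` and `run_agent_action` are hypothetical and do not correspond to any ServiceNow API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet

# Hypothetical action names; a real platform defines its own.
PRIVILEGED_ACTIONS = frozenset({"create_user", "grant_role", "modify_system_setting"})


class ActionDenied(Exception):
    """Raised when an AI agent requests an action outside its policy."""


@dataclass(frozen=True)
class AgentPolicy:
    """Deny-by-default allowlist of actions an AI agent may perform."""
    allowed_actions: FrozenSet[str]

    def authorize(self, action: str) -> bool:
        # Only explicitly allowed actions pass, and privileged actions are always
        # refused, even if one was added to the allowlist by mistake.
        return action in self.allowed_actions and action not in PRIVILEGED_ACTIONS


def run_agent_action(policy: AgentPolicy, action: str,
                     handlers: Dict[str, Callable[[], str]]) -> str:
    """Check the policy before dispatching the agent's requested action."""
    if not policy.authorize(action):
        raise ActionDenied(f"AI agent may not perform {action!r}")
    return handlers[action]()
```

Under this design, an agent misconfigured with `{"open_ticket", "create_user"}` still cannot create users, because privileged actions are blocked regardless of the allowlist. A real deployment would likely also check the requesting user’s own permissions before the agent acts on their behalf.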

AI Security Strategy

- Hardened auth: Rotate unique tokens and enforce MFA for AI integrations.

- Strict privileges: Restrict AI scope to prevent unauthorized account creation.

- Security audits: Perform thorough abuse-case testing on all AI features.

- Active watch: Monitor for abnormal behavior and apply patches promptly.

To prevent similar incidents, companies can take the following steps:

- Secure authentication: Remove any hardcoded or universal credentials and use strong, unique tokens with MFA for AI integrations.

- Restrict AI agent privileges: Limit what AI agents can do. They should not be able to create user accounts or modify important settings.

- Assess AI capabilities: Perform security audits and abuse-case testing on new AI capabilities, as you would with any other software.

- Monitor and update: Watch closely for abnormal AI agent behavior, and apply patches or improvements as soon as possible.
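As a rough illustration of the monitoring step, the sketch below scans a hypothetical audit log of AI agent actions and flags any privileged operation, plus any user on whose behalf the agent issues an unusual burst of actions within a time window. The `AgentEvent` log format, the action names and the thresholds are assumptions chosen for the example.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Deque, Dict, Iterable, List, Tuple

# Hypothetical action names treated as high risk when an AI agent performs them.
PRIVILEGED_ACTIONS = {"create_user", "grant_role", "modify_system_setting"}


@dataclass
class AgentEvent:
    timestamp: datetime
    on_behalf_of: str  # user the agent acted for
    action: str


def find_alerts(events: Iterable[AgentEvent], burst_limit: int = 20,
                window: timedelta = timedelta(minutes=5)) -> List[Tuple[str, AgentEvent]]:
    """Flag privileged agent actions and unusual bursts of actions per user."""
    alerts: List[Tuple[str, AgentEvent]] = []
    recent: Dict[str, Deque[datetime]] = defaultdict(deque)
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.action in PRIVILEGED_ACTIONS:
            alerts.append(("privileged action by AI agent", event))
        times = recent[event.on_behalf_of]
        times.append(event.timestamp)
        # Slide the window: forget actions older than `window`.
        while event.timestamp - times[0] > window:
            times.popleft()
        if len(times) > burst_limit:
            alerts.append(("burst of agent actions", event))
    return alerts
```

Thresholds such as `burst_limit` would need tuning against normal usage; the point of the sketch is that an agent-initiated account creation raises an alert on its own, regardless of volume.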

By taking these precautions, companies can enjoy AI-driven efficiency without compromising security. AI can accelerate business processes; however, organizations must balance innovation with proper safeguards so that new features don’t become new threats.

Conclusion: When AI Can Grant Admin, One Login Becomes Total Compromise

ServiceNow’s chatbot flaw is a wake-up call for every AI-enabled platform. A shared default credential across integrations, plus email-only identity checks, turned simple user impersonation into a direct path to enterprise control. In testing, the researcher moved from user-level access to full takeover by instructing Now Assist AI to create an administrator account.

Why This Threat Matters

AI features compress attack timelines.

- Weak authentication makes a known identifier, such as an email address, the only barrier

- Over-privileged AI turns a single prompt into a privileged change

- Platform integrations expand the blast radius across business systems

Why Organizations Are Vulnerable

This is not a ServiceNow-only lesson; it is an AI governance problem.

- Shared credentials across connectors

- Email-based verification without strong proof of identity

- AI agents allowed to create accounts or modify critical settings

- Limited abuse-case testing for AI workflows

- Delayed visibility into what the AI is doing on behalf of users

Where Xcitium Changes the Outcome

For organizations using Xcitium Identity Threat Detection & Response (ITDR), this attack would not succeed.

- Impersonation attempts are detected and stopped through identity risk signals

- Suspicious session behavior is flagged before it becomes privileged action

- Unauthorized privilege escalation paths are blocked early

- Identity misuse is cut off before it turns AI into an admin factory

Secure AI at the Identity Layer

AI accelerates productivity, but it also accelerates compromise when identity and privilege controls are weak. Enforce hardened authentication, restrict AI privileges, and monitor identity behavior continuously. Choose Xcitium ITDR to close the identity gaps attackers exploit first.