Deepfake technology, powered by sophisticated machine learning algorithms, can produce hyper-realistic fake videos and audio recordings, making it increasingly difficult to tell authentic media from manipulated media.

As deepfake technology becomes more accessible, its implications stretch far beyond entertainment and novelty. From misinformation campaigns to identity theft, the misuse of deepfakes poses a serious challenge to individuals, businesses, and even national security. In this article, we will explore how deepfakes work, their real-world consequences, and how you can protect yourself from being deceived.

How Do Deepfakes Work?

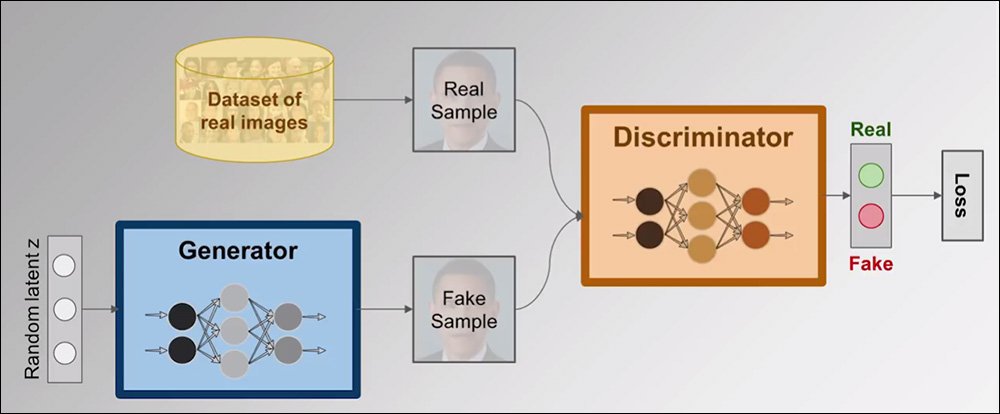

Many deepfake systems are built on Generative Adversarial Networks (GANs), an AI framework in which two neural networks compete against each other: a generator that produces fake content and a discriminator that tries to tell that content apart from real examples. Other approaches, such as autoencoder-based face swapping, are also common. As training proceeds, the generator learns to create increasingly convincing fakes, which makes detection significantly harder.
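
To make the competition concrete, here is a minimal sketch of the two loss values that a GAN typically tracks: one for the network that detects fakes (usually called the discriminator) and one for the network that generates them (the generator). It assumes the discriminator outputs a score between 0 and 1, where 1 means "looks real". The function names and the sample scores are illustrative assumptions, and no actual neural network is trained here.

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy loss for the discriminator.

    real_scores: scores in (0, 1) the discriminator gave to real samples.
    fake_scores: scores in (0, 1) the discriminator gave to generated samples.
    The loss is low when real samples score near 1 and fakes score near 0.
    """
    real_term = -sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Non-saturating generator loss.

    The loss is low when the discriminator rates generated samples as real.
    """
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)


# Illustrative (made-up) scores: early in training the discriminator
# confidently rejects fakes; later, the fakes score much closer to "real".
early_generator_loss = generator_loss([0.1, 0.2])
late_generator_loss = generator_loss([0.8, 0.9])

# A discriminator that separates real from fake well has a lower loss
# than one that outputs 0.5 for everything.
sharp_discriminator = discriminator_loss([0.9, 0.95], [0.1, 0.05])
confused_discriminator = discriminator_loss([0.5, 0.5], [0.5, 0.5])
```

In a real training loop the two losses pull against each other: every improvement in the generator raises the discriminator's loss, and vice versa. That tension is what drives the fakes toward realism.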

To build a deepfake of a specific person, a model is typically fed large numbers of images, video frames, or audio clips of that individual, often thousands, from which it learns the person’s facial features, voice patterns, and mannerisms. This data allows the AI to manipulate existing footage or create entirely synthetic media that appears authentic.

Real-World Implications of Deepfake Technology

1. Political Misinformation and Propaganda

Deepfakes have been weaponized in political arenas to manipulate public perception. Imagine a video showing a world leader making controversial statements—only to later be revealed as entirely fabricated. This capability has the potential to influence elections, incite unrest, and damage reputations.

2. Cybercrime and Identity Theft

Criminals are now using deepfake technology to impersonate individuals in video calls, phone conversations, and even social media posts. Techniques such as real-time face swapping and voice cloning have been used in attempts to bypass biometric authentication systems and to scam unsuspecting victims.

3. Corporate Espionage and Fraud

Financial institutions and businesses are particularly vulnerable to deepfake-related fraud. For example, cybercriminals have used AI-generated voices to impersonate CEOs, tricking employees into transferring large sums of money. These executive-impersonation scams, often called “CEO fraud,” are rapidly increasing in sophistication.

4. Defamation and Privacy Violations

Individuals, especially celebrities and public figures, have fallen victim to deepfake-based defamation. Fake videos can be used to tarnish reputations, extort money, or spread false narratives. Worse, deepfake pornography has emerged as a disturbing trend, affecting countless victims worldwide.

How to Detect Deepfakes

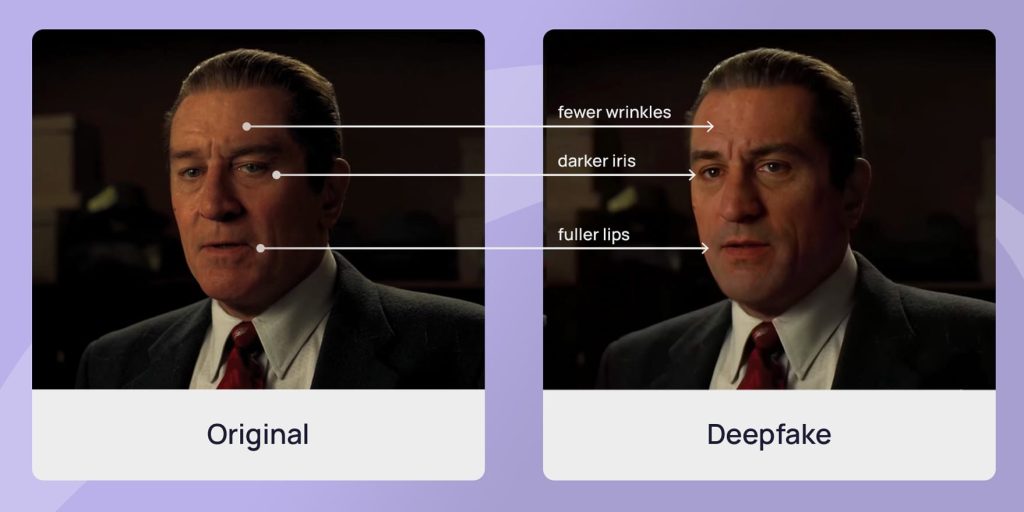

While deepfake technology continues to improve, several indicators can help in spotting fake content:

- Unnatural Facial Expressions: AI struggles to replicate microexpressions accurately.

- Inconsistent Blinking Patterns: Some deepfake models, particularly older ones, fail to reproduce natural blinking and eye movements.

- Unusual Lighting or Shadows: Mismatched lighting effects can indicate manipulation.

- Lip-Syncing Issues: Slight discrepancies between spoken words and lip movements are common in deepfake videos.

- Artifacts and Blurring: Poorly generated deepfakes may contain blurry edges or distortions around the face.
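
As one illustration of how an indicator from this list can be turned into a measurable signal, the sketch below estimates blinking from eye landmarks using the eye aspect ratio (EAR), a commonly used ratio of eyelid opening to eye width. It assumes that six (x, y) landmarks per eye have already been extracted for each frame by some face-landmark detector, which is not shown. The threshold of 0.2 and the two-frame minimum are illustrative assumptions that would need tuning, and an unusual blink rate is at most a weak hint, not proof of manipulation.

```python
import math


def eye_aspect_ratio(eye):
    """Compute the eye aspect ratio from six (x, y) landmarks.

    Assumed ordering: p1 and p4 are the horizontal eye corners,
    p2 and p3 lie on the upper lid, p6 and p5 on the lower lid.
    The ratio drops toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames below `threshold`."""
    blinks = 0
    run = 0
    for ear in ear_per_frame:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip may end while the eye is still closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_per_frame, fps, threshold=0.2, min_frames=2):
    """Convert a blink count into a per-minute rate for a clip at `fps`."""
    minutes = len(ear_per_frame) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(ear_per_frame, threshold, min_frames) / minutes


# Made-up landmarks for a wide-open eye, and a made-up EAR series.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
sample_ears = [0.3, 0.3, 0.1, 0.1, 0.3, 0.1, 0.3, 0.15, 0.15, 0.15]
sample_blinks = count_blinks(sample_ears)
```

A clip whose blink rate is far from what you would expect of a person on camera could then be flagged for closer manual review alongside the other indicators.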

Combating the Deepfake Threat

1. AI-Powered Detection Tools

Several organizations have developed AI models designed to identify deepfakes. Tools such as Microsoft’s Video Authenticator and Deepware Scanner analyze videos for signs of manipulation. Researchers are also working on blockchain-based verification systems to certify authentic content.
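
The core idea behind ledger-based verification can be sketched in a few lines: a publisher registers a cryptographic fingerprint of the original file, and anyone can later check whether a copy matches a registered fingerprint. The example below is a simplified, in-memory illustration under stated assumptions. The `ContentLedger` class and its methods are hypothetical and do not represent the API of any real blockchain or of the tools named above.

```python
import hashlib


def fingerprint(media_bytes):
    """Return the SHA-256 hex digest of a media file's raw bytes."""
    return hashlib.sha256(media_bytes).hexdigest()


class ContentLedger:
    """Hypothetical append-only registry of media fingerprints.

    Each entry commits to the previous entry's hash, so altering an
    earlier record breaks every later link in the chain.
    """

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _entry_hash(prev_hash, publisher, media_hash):
        payload = f"{prev_hash}|{publisher}|{media_hash}".encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def register(self, media_bytes, publisher):
        """Record a fingerprint of `media_bytes` attributed to `publisher`."""
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        media_hash = fingerprint(media_bytes)
        self.entries.append({
            "prev_hash": prev_hash,
            "publisher": publisher,
            "media_hash": media_hash,
            "entry_hash": self._entry_hash(prev_hash, publisher, media_hash),
        })
        return media_hash

    def is_registered(self, media_bytes):
        """True if these exact bytes were registered earlier."""
        media_hash = fingerprint(media_bytes)
        return any(e["media_hash"] == media_hash for e in self.entries)

    def chain_is_intact(self):
        """Recompute every link and confirm that no entry was altered."""
        prev_hash = self.GENESIS
        for e in self.entries:
            expected = self._entry_hash(prev_hash, e["publisher"], e["media_hash"])
            if e["prev_hash"] != prev_hash or e["entry_hash"] != expected:
                return False
            prev_hash = e["entry_hash"]
        return True


# Usage with placeholder bytes standing in for real video files.
ledger = ContentLedger()
ledger.register(b"original interview footage", publisher="example-newsroom")
ledger.register(b"press conference footage", publisher="example-newsroom")
authentic = ledger.is_registered(b"original interview footage")
tampered = ledger.is_registered(b"original interview f00tage")
```

Note that an exact-hash check only confirms that the bytes are identical. Any re-encoding, cropping, or compression of a genuine video changes its fingerprint, so a check like this can confirm that a copy matches a registered original but cannot by itself prove that an unregistered file is fake.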

2. Regulation and Legal Measures

Governments worldwide are beginning to address deepfake-related threats through legislation. The U.S. Deepfake Detection Act and the European Union’s AI Act, which includes transparency obligations for AI-generated content, are examples of efforts to combat synthetic media misuse.

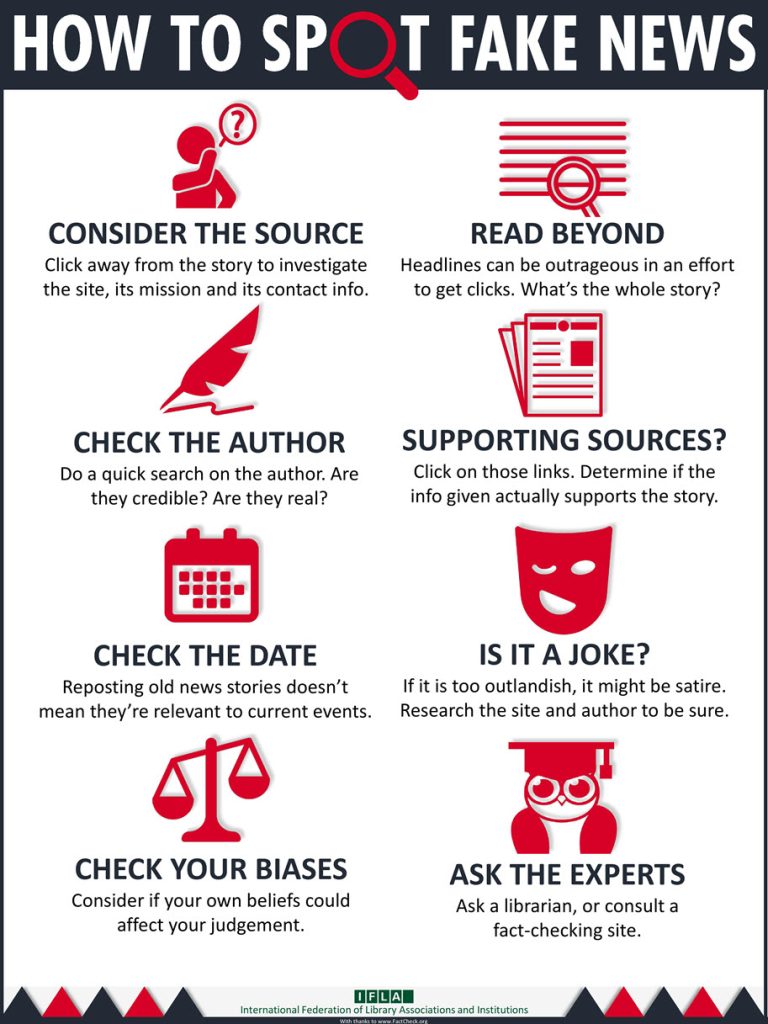

3. Public Awareness and Media Literacy

One of the best defenses against deepfakes is education. The more people understand how deepfakes work, the less likely they are to fall for misinformation. Fact-checking sources and verifying content from reputable outlets can help mitigate the spread of deceptive media.

Deepfake technology represents both an incredible advancement in AI and a significant threat to truth and security. As these synthetic media tools become more sophisticated, individuals and organizations must remain vigilant against their misuse. By leveraging AI-powered detection tools, advocating for stricter regulations, and promoting digital literacy, we can work towards safeguarding our information ecosystem from deepfake manipulation.

In a world where seeing is no longer believing, critical thinking and skepticism are more important than ever.